Deep Reverse Tone Mapping

Yuki Endo, Yoshihiro Kanamori, and Jun Mitani

University of Tsukuba


Abstract:

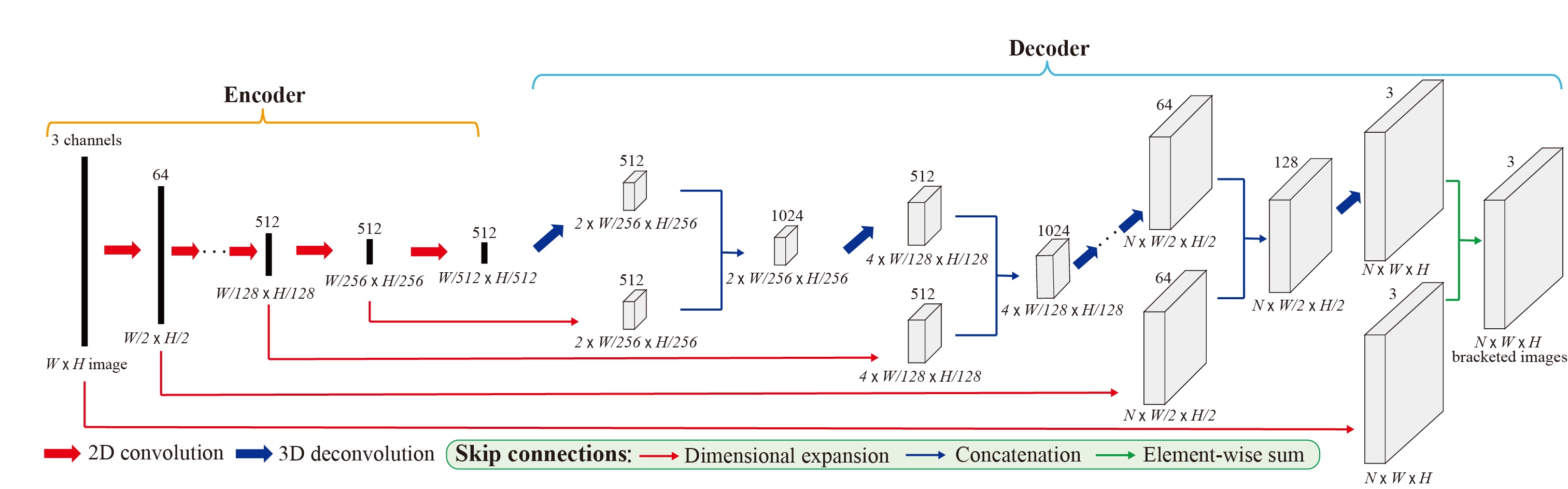

Inferring a high dynamic range (HDR) image from a single low dynamic range (LDR) input is an ill-posed problem in which we must compensate for data lost to under-/over-exposure and color quantization. To tackle this, we propose the first deep-learning-based approach for fully automatic inference using convolutional neural networks. Because naively inferring a 32-bit HDR image directly from an 8-bit LDR image is intractable due to the difficulty of training, we take an indirect approach: the key idea of our method is to synthesize LDR images taken with different exposures (i.e., bracketed images) based on supervised learning, and then reconstruct an HDR image by merging them. By learning the relative changes of pixel values due to increased/decreased exposures using 3D deconvolutional networks, our method can reproduce not only natural tones without introducing visible noise but also the colors of saturated pixels. We demonstrate the effectiveness of our method by comparing our results not only with those of conventional methods but also with ground-truth HDR images.

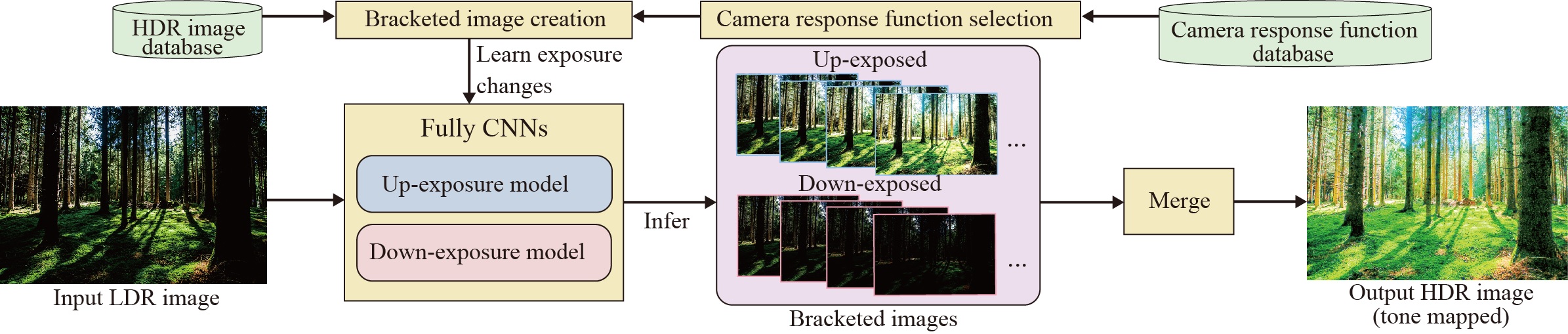

Overview

We train 3D convolutional neural networks in order to infer bracketed LDR images from a single LDR image. The inferred LDR images are then merged to generate an HDR image.
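
The merging step can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes the bracketed LDR images are already available (in the method above they would come from the trained networks), that their relative exposure times are known, and that the camera response is a simple gamma curve. The function name `merge_bracketed_ldr`, the `gamma` parameter, and the triangular weighting are all hypothetical choices made for illustration.

```python
import numpy as np

def merge_bracketed_ldr(ldr_stack, exposure_times, gamma=2.2):
    """Merge 8-bit bracketed LDR images into one linear HDR image.

    Assumptions (not taken from the paper): a plain gamma camera
    response and a triangular weight that trusts mid-range pixels.
    """
    # Shape (N, H, W, C), scaled to [0, 1].
    ldr = np.asarray(ldr_stack, dtype=np.float64) / 255.0
    times = np.asarray(exposure_times, dtype=np.float64).reshape(-1, 1, 1, 1)

    # Undo the assumed gamma response to get values linear in exposure.
    linear = ldr ** gamma

    # Triangular weight: near 0 for under-/over-exposed pixels, 1 at mid-gray.
    weights = np.maximum(1.0 - np.abs(2.0 * ldr - 1.0), 1e-4)

    # Weighted average of per-image radiance estimates (linear / time).
    hdr = (weights * linear / times).sum(axis=0) / weights.sum(axis=0)
    return hdr.astype(np.float32)

# Synthetic check: simulate three exposures of a constant-radiance scene.
radiance = np.full((2, 2, 3), 0.5)
exposure_times = [0.5, 1.0, 2.0]
stack = [
    np.round(np.clip(radiance * t, 0.0, 1.0) ** (1.0 / 2.2) * 255.0).astype(np.uint8)
    for t in exposure_times
]
hdr = merge_bracketed_ldr(stack, exposure_times)
```

The weighting downplays saturated and very dark pixels, so each HDR value is driven mainly by the exposures in which that pixel is well exposed. A calibrated response curve could replace the gamma assumption if one is available.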